- Artificial intelligence continues to advance apace in 2024.

- Policymakers are taking note – but will this year see big strides in AI governance?

- Three industry experts give their insights in the latest episode of the World Economic Forum’s Radio Davos podcast.

If 2023 was the year the world became familiar with generative AI, is 2024 the year in which governments will act in earnest on its governance?

That’s the question put to guests on the latest episode of the World Economic Forum podcast Radio Davos. The following article is based on conversations, recorded at Davos 2024, with:

- Alexandra Reeve Givens, CEO of the nonprofit Center for Democracy & Technology;

- Aidan Gomez, Co-founder and CEO of enterprise AI company Cohere;

- and Anna Makanju, Vice President of Global Affairs at OpenAI, which creates AI products including ChatGPT.


Here are some of their insights – listen to the podcast to hear the full conversations.

The drive for AI governance

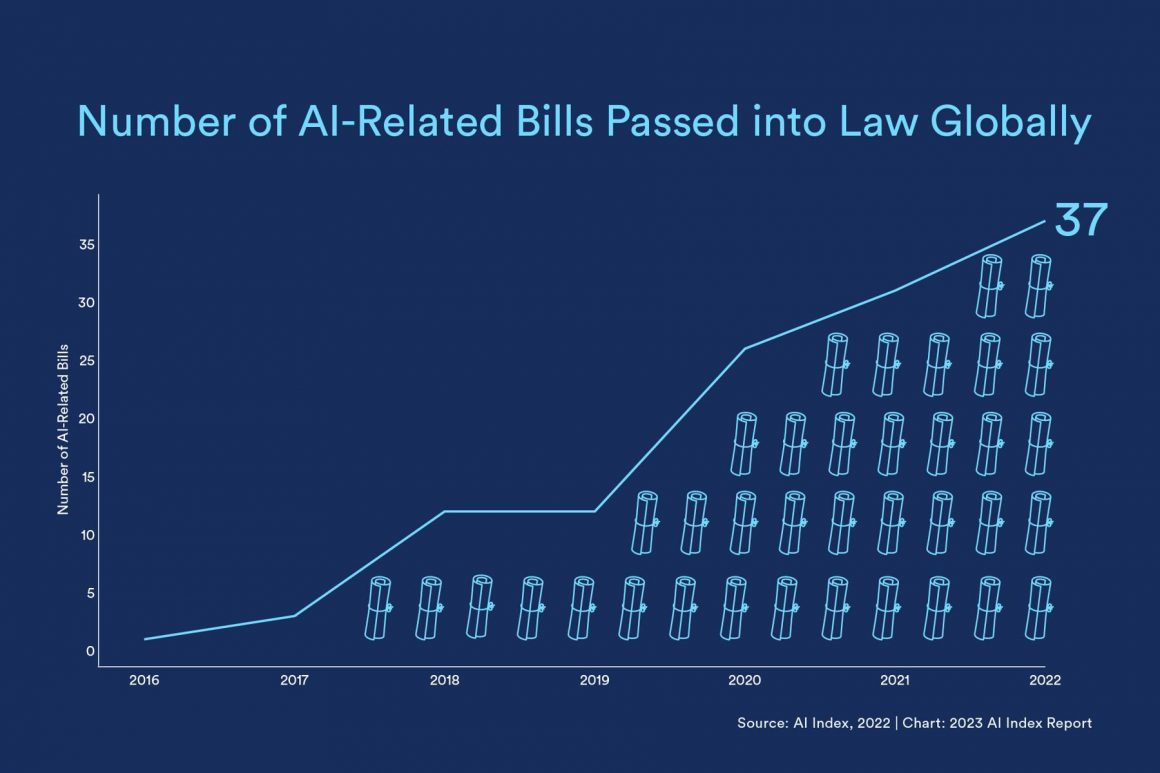

Stanford University’s AI Index shows policymaker interest in AI is rising. Across 127 countries, the number of bills passed into law that reference artificial intelligence grew from 1 in 2016 to 37 in 2022. And the European Union is readying its AI Act, which it calls the world’s first comprehensive AI law.

Cohere’s Aidan Gomez agrees that governance is needed but highlights that “the way we get there is incredibly important”.

“It’s tough to regulate a horizontal technology like language. It impacts every single vertical, every single industry – wherever you have more than one human, there’s language happening.

“We should be regulating it in a vertical layer and helping the existing policymakers, the existing regulators, get smart on generative AI and its impact in their domain of expertise. This will help them mitigate the risks in their context.”

He adds that global regulation needs international consensus and coordination, and that any regulation should empower rather than stifle innovation, especially that of smaller companies.

“AI is such a powerful, potent technology that I think that we have to protect the little guy. There have to be new ideas. There has to be a new generation of thinkers building and contributing. And so that needs to be a top priority for the regulators.”

Moving regulation into the ‘action phase’

As AI continues to advance, however, so too does the potential for misuse of the technology. And with more than 2 billion voters worldwide heading to the polls in 2024, there is growing focus on risks such as disinformation and deep fakes that threaten to disrupt the democratic process.

Many of the biggest AI companies have signed the Tech Accord to Combat Deceptive Use of AI in 2024 Elections, a set of commitments to combat fake images, video and audio of political candidates.

But dealing with deep fakes is just one part of the immediate puzzle, according to Alexandra Reeve Givens of the Center for Democracy & Technology. Longer term, she says, it will be important to consider the role economic inequality plays in the survival of democracy.

“For AI, that raises questions not only of job displacement,” she adds. “But also how decisions are made about people who get access to a loan, who’s chosen for a job, whether someone gets approved for public benefits or not.

“AI is seeping into all of those systems in ways that policymakers and companies alike really have to pay attention to.”

Companies, governments and civil society are already discussing how to deal with such issues, and for Givens, 2024 is the year they must “move into the action phase”.

“We have to go from high-level discussion to actual rubber-meets-the-road laws being written, policies being adopted, new designs being deployed by companies.

“A big divide in 2023 was about whether or not we focus on long-term risks or short-term risks. I would like to think we’ve reached the maturity model now, with people realizing we can and must do both at once.”

Creating a common dialogue

OpenAI’s Anna Makanju advocates for both international consensus on “catastrophic risk” around AI and self-regulation within companies.

Red-teaming, for example, is common practice in the industry, she says. This is a process used in software development and cybersecurity to analyze risks and evaluate harmful capabilities in new systems.

“This is something we’ve done with our models to make sure we can understand what some of the immediate risks will be – how can it be misused? How can we mitigate those risks?”

To share safety insights and expertise, four of the biggest AI companies have formed the Frontier Model Forum. Through this forum, Anthropic, Google, Microsoft and OpenAI have committed to advancing AI safety research and forging public-private collaborations to ensure responsible AI development.

“It is about identifying a common set of practices,” Makanju says, adding that this work also feeds into regulation by helping to identify where thresholds should be set. “At the end of the day, the companies that are building these models know the most about them. They have the most granular understanding of all the different aspects. And I don’t think anyone should trust companies to self-regulate. But I do believe that it’s necessary to have this dialogue, to have regulation that’s actually going to be robust.”

The World Economic Forum’s AI Governance Alliance provides a forum for industry leaders, governments, academic institutions and civil society organizations to openly address issues around generative AI to help ensure the design and delivery of responsible AI systems.

By: David Elliott (Senior Writer, Forum Agenda)

Originally published at: World Economic Forum

Source: cyberpogo.com